Introduction

Training a deep learning model takes a lot of time and effort. Several factors, including the model’s architecture and depth and the size of the dataset, affect how long training takes. We also need to account for the effort spent on hyperparameter tuning and on comparing results across the runs we perform. In our quest to find optimal hyperparameters, we often end up using grid search (or random search, or Bayesian optimization). Grid search finds the best combination of hyperparameters by training and evaluating a model for every combination in a predefined set of candidate values. This sharply increases the number of models trained: tuning three hyperparameters with three candidate values each already means 3 × 3 × 3 = 27 models. Keeping track of the results from all these models can be confusing and strenuous.

Over time, I’ve learned that to get the best results from a grid search, we need to monitor the training process efficiently and identify the best models. In this blog post, I want to share my approach to continually monitoring and keeping track of results from many models. It includes using TensorBoard, writing custom logs, and building Slack and email notifiers. These techniques integrate easily with deep learning frameworks like Keras, TensorFlow, and PyTorch. Let’s get started!

TensorBoard

TensorBoard is a visualization tool. It generates and displays live graphs during training, which makes a deep learning practitioner’s life easier. Along with accuracy and loss curves, it can visualize the neural network graph. It is easy to install and integrate into any training script. Here, I explain how to use TensorBoard with Keras.

Installation: run pip install tensorboard from a command prompt or terminal.

In the script, import the TensorBoard callback from ‘keras.callbacks’. Then initialize a TensorBoard object with a log directory and pass it to the ‘model.fit’ function through its callbacks argument.
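A minimal sketch of this setup, assuming TensorFlow 2.x, where Keras is available as tensorflow.keras. The random toy data, the small model, and the log directory name logs/example-run are placeholders for illustration, not part of the original workflow.

```python
import os

import numpy as np
from tensorflow import keras

# Hypothetical toy data standing in for a real dataset: 20 features, 3 classes.
rng = np.random.default_rng(0)
x_train = rng.random((128, 20)).astype("float32")
y_train = rng.integers(0, 3, size=128)

model = keras.Sequential([
    keras.Input(shape=(20,)),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="sgd", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# The callback writes event files under log_dir; TensorBoard reads them from there.
log_dir = os.path.join("logs", "example-run")
tensorboard = keras.callbacks.TensorBoard(log_dir=log_dir)

history = model.fit(
    x_train,
    y_train,
    epochs=2,
    batch_size=32,
    validation_split=0.2,  # gives TensorBoard validation curves as well
    callbacks=[tensorboard],
    verbose=0,
)
```

Because validation_split is set, the run produces both training and validation curves, which is what the comparison in TensorBoard relies on.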

If you are using a grid search, it is better to generate custom names for the logs dynamically and pass them to the TensorBoard object. For instance, if I am tuning the optimization algorithm, the number of epochs, and the batch size, I can name my logs ‘SGD-50-1000’ and so on, where SGD is the optimization algorithm, 50 is the number of epochs, and 1000 is the batch size. To do this, separate the training function from the grid search function: for every combination in the grid, the grid search function calls the training function and passes the combination as its parameters.
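The separation between the grid search and the training function can be sketched as follows. The names run_name, grid_search, and fake_train are hypothetical; train_fn stands for your own training function, which would build and fit the model with keras.callbacks.TensorBoard(log_dir=log_dir) in its callbacks list.

```python
import itertools
import os


def run_name(optimizer, epochs, batch_size):
    """Build a log name such as 'SGD-50-1000' from one grid combination."""
    return f"{optimizer}-{epochs}-{batch_size}"


def grid_search(train_fn, optimizers, epoch_options, batch_sizes, log_root="logs"):
    """Call train_fn once per hyperparameter combination.

    Each run gets its own log directory named after its combination,
    so the runs show up under recognizable names in TensorBoard.
    """
    results = {}
    for optimizer, epochs, batch_size in itertools.product(optimizers, epoch_options, batch_sizes):
        name = run_name(optimizer, epochs, batch_size)
        log_dir = os.path.join(log_root, name)
        results[name] = train_fn(optimizer, epochs, batch_size, log_dir)
    return results


# Usage with a stand-in training function that just echoes its log directory.
def fake_train(optimizer, epochs, batch_size, log_dir):
    return log_dir


results = grid_search(fake_train, ["SGD", "Adam"], [50], [1000, 500])
print(sorted(results))  # ['Adam-50-1000', 'Adam-50-500', 'SGD-50-1000', 'SGD-50-500']
```

Keeping the loop generic means the same grid search can drive any training function, and the naming scheme lives in one place.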

Once you start the training script, open a command prompt and launch TensorBoard, pointing it at the directory your callbacks write to (for example, tensorboard --logdir logs).

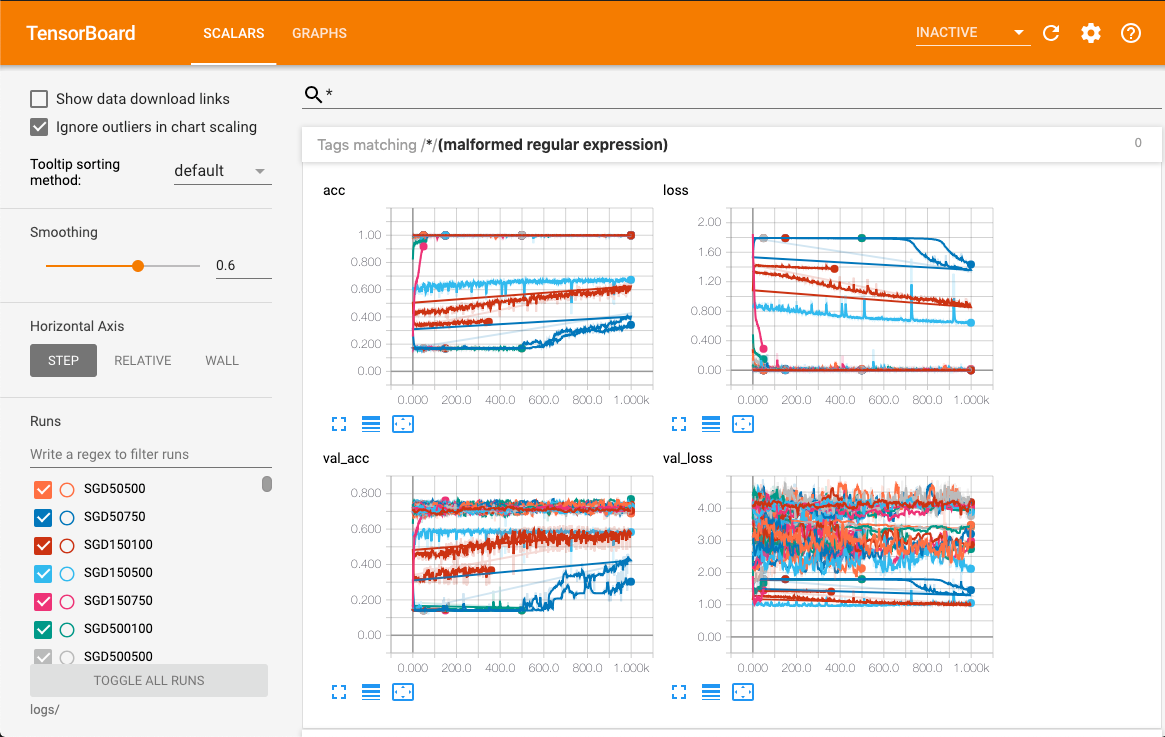

Next, open TensorBoard in your browser at localhost:6006, its default address. Below is a screenshot of my TensorBoard. It gives you a wide range of options to tweak the graphs and compare the performance of different models.

In the bottom-left corner, you can select the models you want to compare. The right pane shows four graphs that are crucial for decision-making: training accuracy, training loss, validation accuracy, and validation loss. TensorBoard lets you expand the graphs and inspect performance at any given iteration. This is where our custom names come in handy! You can easily eliminate underperforming combinations from the grid search and continue tuning the ones that look better. By now, you may have spotted that a few of these models are overfitting the dataset: their training accuracy keeps improving while their validation loss stops falling or starts to rise. In the next iteration, you can take measures such as regularization or data augmentation to address the problem.

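If you are training your models on a remote server, you can create an SSH tunnel from your local machine. The command below forwards local port 6006 to port 6006 on the server (-L), runs in the background (-f), and executes no remote command (-N), so you can browse to localhost:6006 as before.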

ssh -NfL 6006:127.0.0.1:6006 user@remote-server

Custom Logs

Writing logs may seem trivial at first, but custom logs can help you identify the shortcomings of your models. I write logs at two levels. The first is a high-level summary of each hyperparameter search, which looks something like this.
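One hypothetical way to keep such a summary is a CSV file with one row per hyperparameter combination, appended after each run. The function name append_summary and the column names used below are assumptions for illustration; every row passed to it should share the same keys.

```python
import csv
import os


def append_summary(path, row):
    """Append one run's results to a CSV summary.

    The header is written only when the file is new or empty, so the
    function can be called once per grid-search combination.
    """
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(row))
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

A CSV summary opens directly in a spreadsheet or pandas, which makes sorting combinations by a validation metric a one-step operation.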

The second is a detailed log for each iteration, named after the hyperparameters used. For instance, if I’m tuning the optimization algorithm, the number of epochs, and the batch size for a multiclass classification problem, the log is named algorithm-epochs-batch_size.json and contains:

- Train, Validation & Test accuracy

- Train, Validation & Test loss

- A dictionary with detailed predictions from the test set. Below is a sample template for the log.
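A minimal sketch of writing such a per-run log with Python’s json module. The function name write_run_log and the top-level keys accuracy, loss, and test_predictions are assumptions for illustration; accuracy and loss are expected to be dicts keyed by split (train, validation, test).

```python
import json
import os


def write_run_log(algorithm, epochs, batch_size, accuracy, loss, test_predictions, log_dir="."):
    """Save one run's results as <algorithm>-<epochs>-<batch_size>.json and return the path.

    accuracy and loss are dicts keyed by split, e.g.
    {"train": ..., "validation": ..., "test": ...}.
    """
    os.makedirs(log_dir, exist_ok=True)
    path = os.path.join(log_dir, f"{algorithm}-{epochs}-{batch_size}.json")
    log = {
        "accuracy": accuracy,
        "loss": loss,
        "test_predictions": test_predictions,
    }
    with open(path, "w") as f:
        json.dump(log, f, indent=2)  # indented so the file stays human-readable
    return path
```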

The test predictions dictionary has an entry for each class, as well as the IDs of the test samples that were misclassified. Let’s take a deeper dive into what each component means.

"cat": {"cat": 90, "dog": 10, "eagle": 0}

Of all the cat images in the test set, 90 were correctly classified as cats, 10 were incorrectly classified as dogs, and none as eagles.

"_misclassified_": {"id-1": ["cat", "eagle"], "id-2": ["cat", "dog"]}

Each misclassified ID maps to a list containing its actual class followed by its predicted class. Other metrics, such as the false positive rate, false negative rate, and confusion matrix, can be added depending on the problem we are trying to solve. Along with these logs, we can plot the accuracy and loss curves and save the plots to disk. All of these custom logs can be built with simple for loops that compare each prediction with its actual value.
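The per-class counts and the "_misclassified_" entry described above can be built in a single loop over the test set. This is a sketch: the function name summarize_predictions is hypothetical, and it assumes the sample IDs, actual labels, and predicted labels are aligned lists of class names.

```python
def summarize_predictions(ids, actual, predicted, classes):
    """Count predictions per actual class and record misclassified sample IDs."""
    # One counter per (actual class, predicted class) pair, all starting at zero.
    summary = {true_cls: {pred_cls: 0 for pred_cls in classes} for true_cls in classes}
    misclassified = {}
    for sample_id, true_label, pred_label in zip(ids, actual, predicted):
        summary[true_label][pred_label] += 1
        if true_label != pred_label:
            misclassified[sample_id] = [true_label, pred_label]
    summary["_misclassified_"] = misclassified
    return summary


report = summarize_predictions(
    ids=["id-1", "id-2", "id-3"],
    actual=["cat", "cat", "dog"],
    predicted=["eagle", "dog", "dog"],
    classes=["cat", "dog", "eagle"],
)
print(report["_misclassified_"])  # {'id-1': ['cat', 'eagle'], 'id-2': ['cat', 'dog']}
```

Each class entry is effectively one row of a confusion matrix, so the same loop covers that metric too.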

Slack & Email Notifier

Building a Slack notifier is pretty straightforward. Set up an application at https://api.slack.com/apps, leave the settings at their defaults, and create the app. From your app’s settings page, you can get the incoming webhook URL, the bot user, and the channel it posts to. With this information, you can send messages from Python with ‘requests.post’.
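A minimal sketch of such a notifier, assuming a standard Slack incoming webhook that accepts a JSON body with a "text" field. To keep the example dependency-free it uses the standard library’s urllib.request; the function names and message format are hypothetical.

```python
import json
import urllib.request


def build_slack_payload(run_name, metrics):
    """Format one grid-search result as a Slack message payload."""
    lines = [f"Finished run {run_name}"]
    lines += [f"{key}: {value:.4f}" for key, value in metrics.items()]
    return {"text": "\n".join(lines)}


def send_to_slack(webhook_url, payload, timeout=10):
    """POST the payload as JSON to a Slack incoming webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling send_to_slack(WEBHOOK_URL, build_slack_payload("SGD-50-1000", metrics)) at the end of each training run posts the summary, where WEBHOOK_URL is a placeholder for the URL Slack generates for your app. With the requests library, the equivalent call is requests.post(WEBHOOK_URL, json=payload).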

You can trigger the notifier each time a model finishes training with a new set of hyperparameters and have it send a summary of the results to your Slack channel. Similarly, you can build an email notifier using MIMEMultipart from Python’s email package. In the program below, I’m attaching a zip folder with the custom logs and results from the grid search.
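As a hedged sketch of the email side, the code below zips a log directory with shutil.make_archive, attaches it to a MIMEMultipart message, and sends it over SMTP with STARTTLS. The function names, addresses, SMTP host, port 587, and credentials are all placeholders to replace with your own provider’s settings.

```python
import os
import shutil
import smtplib
from email.mime.application import MIMEApplication
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_report_email(sender, recipient, subject, body, logs_dir):
    """Zip logs_dir and attach the archive to a multipart email."""
    base = os.path.normpath(logs_dir)
    # Creates <logs_dir>.zip next to the directory and returns its path.
    archive_path = shutil.make_archive(base, "zip", root_dir=base)
    filename = os.path.basename(archive_path)

    message = MIMEMultipart()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.attach(MIMEText(body, "plain"))

    with open(archive_path, "rb") as f:
        attachment = MIMEApplication(f.read(), Name=filename)
    attachment["Content-Disposition"] = f'attachment; filename="{filename}"'
    message.attach(attachment)
    return message


def send_email(message, host, username, password, port=587):
    """Send the message over SMTP, upgrading the connection with STARTTLS."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(username, password)
        server.send_message(message)
```

Building the message separately from sending it makes it easy to inspect the email before any network call is made.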

Conclusion

One technique that I have not covered here is running a grid search in parallel with scikit-learn. Its GridSearchCV class can train and evaluate several models simultaneously, and you can specify the number of CPU cores to use through the n_jobs parameter (n_jobs=-1 uses all available cores).
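For reference, a minimal GridSearchCV sketch with n_jobs set. It uses a plain scikit-learn estimator (LogisticRegression on the bundled iris dataset) as a stand-in; a Keras model would first need a scikit-learn-compatible wrapper, such as the one provided by the SciKeras package. The parameter grid, cv=3, and n_jobs=2 are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# Every value of C is cross-validated; n_jobs=2 spreads the fits over two cores.
param_grid = {"C": [0.1, 1.0, 10.0]}
search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid, cv=3, n_jobs=2)
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```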