Introduction

Last week at RSA, we had the privilege of meeting incredibly smart investors, potential customers, and security researchers who stopped by our booth. I was asked several interesting questions about how the Bolster Platform’s AI engine works, and one of the most common was: “Can a bad actor use GANs to beat your AI engine?” I decided to address this question in this blog post, which explains in detail my experiments with GANs, how they compare with state-of-the-art models, and what they mean for phishing detection.

At Bolster, we have been dabbling with GANs for quite some time now. A couple of weeks ago, I conducted an experiment on generating fake Nike shoes. I used a basic GAN architecture and trained it on an Amazon EC2 P2 instance. Before we get into the details of the experiment, let us take a look at what GANs are and how they work.

What are GANs?

Generative Adversarial Networks (GANs) are a subset of Machine Learning algorithms with the ability to generate synthetic content that resembles real-world data. For instance, GANs can create a fake Picasso painting or images of a cat that does not exist.

How do they work?

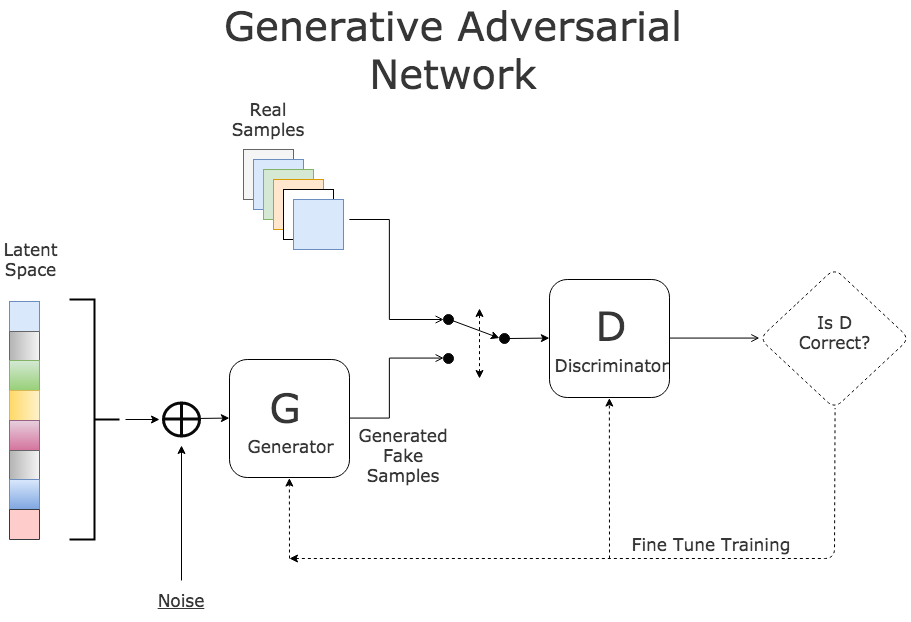

A GAN is made up of two neural networks: a generator and a discriminator. The generator produces new content, and the discriminator checks that content for authenticity. Let me explain this with an analogy: an FBI agent and a counterfeiter who is trying to manufacture fake Nike shoes and sell them in the market as originals. The job of the FBI agent is to inspect all the Nike shoes and let only the original ones into the market. The job of the counterfeiter is to make shoes that look like the originals and fool the FBI agent into allowing them into the market. As time progresses, both get better at what they do because each is trying to outsmart the other. This is how the generator and the discriminator in a GAN learn: in the process of trying to beat their counterpart, they keep learning and getting better.

Fig-1: GAN Architecture. Credits: KDNuggets
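To make the counterfeiter-versus-agent loop concrete, here is a minimal sketch in plain Python. It is not the network used in the experiment below. As simplifying assumptions, the "real data" are single numbers drawn from a normal distribution centered at 4.0, the generator is a linear function `a * z + b` of random noise `z`, the discriminator is a logistic classifier `sigmoid(w * x + c)`, and the learning rate, batch size, and step count are arbitrary illustrative choices.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def discriminator_step(w, c, real, fake, lr):
    """One gradient step that pushes D(real) toward 1 and D(fake) toward 0.

    D(x) = sigmoid(w * x + c); the loss is
    -mean(log D(real)) - mean(log(1 - D(fake))).
    """
    grad_w = grad_c = 0.0
    for x in real:
        p = sigmoid(w * x + c)
        grad_w += -(1.0 - p) * x / len(real)
        grad_c += -(1.0 - p) / len(real)
    for x in fake:
        p = sigmoid(w * x + c)
        grad_w += p * x / len(fake)
        grad_c += p / len(fake)
    return w - lr * grad_w, c - lr * grad_c


def generator_step(a, b, w, c, noise, lr):
    """One gradient step that pushes D(G(z)) toward 1, with G(z) = a * z + b.

    The loss is -mean(log D(G(z))); the discriminator is held fixed.
    """
    grad_a = grad_b = 0.0
    for z in noise:
        p = sigmoid(w * (a * z + b) + c)
        grad_a += -(1.0 - p) * w * z / len(noise)
        grad_b += -(1.0 - p) * w / len(noise)
    return a - lr * grad_a, b - lr * grad_b


def train(steps=2000, batch=32, lr=0.05, seed=0):
    """Alternate discriminator and generator updates, as in the analogy."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters (the counterfeiter)
    w, c = 0.0, 0.0  # discriminator parameters (the agent)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        w, c = discriminator_step(w, c, real, fake, lr)
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        a, b = generator_step(a, b, w, c, noise, lr)
    return a, b, w, c
```

Calling `train()` returns the final generator and discriminator parameters. In this toy setup the generator's offset `b` is the knob that moves its samples toward the real data. An image GAN follows the same alternating pattern, with deep networks in place of the two small functions.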

You might wonder how pitting two neural networks against one another is useful for any real-world application. The value of a GAN lies in how well its generator network has been trained. Since being introduced in 2014, GANs have gained immense popularity in several fields such as fashion, art, and music. This can be attributed to their ability to produce novel content rather than merely classify existing data.

Fake Nike Shoes Generation

In order to train a GAN to produce fake Nike shoe images, we need a dataset made up of real-world shoes. I downloaded around 1,000 Nike shoe images from Google and resized them to 128×128. The idea of the experiment was to see how easy or difficult it is for a GAN to generate images that resemble the real world. The GAN was trained for 50,000 iterations on an Amazon EC2 P2 instance over the course of a week. After every 10,000 iterations, I plotted the outputs from the generator in an 8×8 grid (64 images).
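The snapshot routine described above can be sketched as follows. This is an illustrative reconstruction, not the plotting code used in the experiment. The helper names `snapshot_iterations` and `tile_grid` are hypothetical, and images are represented as plain nested lists of pixel values to keep the example dependency-free.

```python
def snapshot_iterations(total=50_000, every=10_000):
    """Iterations at which generator outputs are plotted (iteration 0 included)."""
    return list(range(0, total + 1, every))


def tile_grid(images, rows=8, cols=8):
    """Arrange rows * cols equally sized images into one large 2-D image.

    Each image is a list of pixel rows; images are placed left to right,
    then top to bottom.
    """
    if len(images) != rows * cols:
        raise ValueError("expected rows * cols images")
    height = len(images[0])
    grid = []
    for r in range(rows):
        row_images = images[r * cols:(r + 1) * cols]
        for y in range(height):
            line = []
            for img in row_images:
                line.extend(img[y])
            grid.append(line)
    return grid
```

With 64 generator outputs of 128×128 pixels each, `tile_grid` would produce a single 1024×1024 sheet per snapshot, which makes it easy to compare the generator's progress side by side.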

Fig-2: Generator output without training

Fig-3: Generator output after 10000 iterations

Fig-4: Generator output after 30000 iterations

Fig-5: Generator output after 50000 iterations

At iteration 0, the generator has not started learning yet and outputs random noise. You can see the evolution of the generator from iteration 10,000 to iteration 50,000. Although some of these images start to look like Nike shoes, they are not close to resembling what exists in the real world. One interesting observation is the 6th shoe in the topmost row at iteration 50,000: the GAN was able to generate a Nike logo and place it accurately on the shoe. Even so, as humans, we can tell at a glance that these are not real Nike shoes.

We can make the GAN generate better images by training it longer, using a larger dataset (millions of images), and using a deeper network. For instance, NVIDIA trained a StyleGAN that can create images of a person, a cat, or a bedroom that look just like real-world images. Below are the time and resources that the training process consumed.

Table: StyleGAN training time by number of GPUs (Tesla V100) and resolution of training images (1024×1024, 512×512, 256×256). Credits: NVIDIA’s StyleGAN GitHub page

Training a model that generates HD images that look like they came from the real world took them 41 days on a single GPU. The problem with GANs is the amount of resources it takes to train them. However, it is certainly possible for a motivated bad actor to generate high-quality images that can beat any basic AI system. For example, a computer vision system that relies on image feature-based matching can be easily bypassed by GAN-generated images.
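To illustrate why simple feature matching is fragile, here is a toy sketch of one such matcher: an average hash compared by Hamming distance. This is only an illustration, not Bolster's detector or any particular product. The 4×4 "logo", the hand-made look-alike standing in for a generated image, and the `max_distance` threshold are all assumptions chosen for the example.

```python
def average_hash(pixels):
    """Return one bit per pixel: 1 where the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)


def hamming(h1, h2):
    """Count the positions at which two hashes differ."""
    return sum(b1 != b2 for b1, b2 in zip(h1, h2))


def matches_known_logo(candidate, reference, max_distance=2):
    """Flag the candidate only if its hash is close to the reference hash."""
    distance = hamming(average_hash(candidate), average_hash(reference))
    return distance <= max_distance


# A tiny grayscale "logo": a bright square in the middle.
reference = [
    [0, 0, 0, 0],
    [0, 255, 255, 0],
    [0, 255, 255, 0],
    [0, 0, 0, 0],
]

# Stand-in for a generated look-alike: same shape, drawn one pixel off.
lookalike = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

print(matches_known_logo(reference, reference))  # exact copy is flagged
print(matches_known_logo(lookalike, reference))  # look-alike slips through
```

An exact copy of the logo matches, while an image that a person would still read as the same shape falls outside the threshold. A generated image does not reuse the original pixels, so a matcher built on this kind of fixed feature comparison has little to hold on to.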

Conclusion

We believe the future of online scams will leverage automated fake image generation using GANs or similar means. These techniques give bad actors the advantage of being able to create realistic fake websites at scale. In the near future, it won’t be uncommon to see websites with fake logos or other artifacts infringing on a brand’s trademarks.

At Bolster, we’re acutely aware of the limitations that current AI systems have in the face of GANs. We firmly believe there is no silver bullet for beating motivated bad actors with advanced tools. Therefore, our detection technique does not depend on a single algorithm. We’ve built an ensemble of models that leverages both the images and the text of a website to detect phishing and counterfeiting. Please do check out Checkphish.ai if you want to scan suspicious links, and please spread the word if you like it.