The search for a transformer based backbone model suitable to handle vision tasks has been going on for a while now. There have been multiple transformers proposed to solve different challenges in adopting SWIN transformers for computer vision.

A critical challenge that impedes the performance of transformers for vision tasks is the difference in variation of scale in images and text data. Images as of now are acquired in high resolution, which makes variation of scale in different visual entities even more challenging.

Convolutional Neural Networks (CNNs) have been the go-to neural networks to solve problems in computer vision. CNNs offer the learning through visual kernels which learn the underlying feature maps to find the feature patterns using the convolutional operations.

CNNs solve the problem of scale variation using different techniques like downsampling through max-pool layers and feature pyramids. The feature pyramids allow the network to process input images at different scales. This allows for detection of smaller level features like small scale entities in object detection or pixel level features for image segmentation tasks.

When it comes to NLP tasks, the variation in different words of a input sequence is far less than the vision tasks. For example in english language, the largest word is composed of 45 letters and smallest word is 1 letter.

In computer vision, the variation between objects can be up to 1000 pixels. Clearly for transformers to be effective in solving the computer vision tasks efficiently, it is important for them to be able to handle scales. The transformers however, have not been invented on similar theoretical base. Hence, it is where the model for SWIN transformer comes in to form a bridge between the two theoretical bases.

Motivation Behind Shifted Window-Based Transformer

Transformers have been able to change the landscape of machine learning in past years. For NLP tasks, Transformers are now the default family of models to accomplish tasks from text classification, sentiment analysis to even generating answers when prompted with questions.

The advantage of transformers have been their ability to contextualize very long sequences of texts, with the help of a mechanism called self-attention. The idea is to process the text as an input sequence and divide it into smaller tokens of texts.

Every single token in this sequence is then compared to every other token to find the relationships among them. The model learns to associate appropriate weights to all the tokens in the sequence that contextually correspond to current token the most. This is where transformers are really good at capturing global contexts.

For computer vision tasks, CNNs have been the state of the art model. They have the capability to capture deep visual patterns imperceptible even to the human eye; these patterns known as features are aggregated by CNNs to solve vision related tasks.

CNNs rely on convolution kernels as the feature extractor. These kernels process a local patch of an image at a time as can be seen in figure 1. This tendency of the patches to focus only on a small receptive field out of the entire image at a time, limits it’s capability to gather the understanding of the global context in images.

Transformers on the other hand are great at gathering global contexts. Transformers also have a much larger learning capacity than traditional CNNs. Due to these capabilities, researchers have been trying to explore methods to bridge the gap between transformers and vision tasks. The Vision Transformer is the pioneer work towards achieving this goal.

The vision transformer processes an image by dividing input image into smaller patches as seen in Figure 2. These patches are treated the same way as tokens in a text sequence and are called visual tokens.

The patches are fed sequentially to the vision transformer model and the weights are adjusted by finding the relationship between the patches. Since each patch is compared to every other patch to find the relevant contexts, the processing time complexity of vision transformer increases quadratically with image dimensions. This makes it unscalable to process images of high resolution. Furthermore, image patches are only computed at fixed scale whereas visual entities in an image are highly variable in scale. This makes it difficult to train vision transformers on tasks dealing with small visual entities like object detection and pixel level patterns like image segmentation.

SWIN Transformers addresses both these challenges by proposing hierarchical processing of image tokens using shifted window patches with linear complexity to image size. The SWIN transformer processes input image tokens at multiple levels while keeping the number of patches generated same.

Layer Architecture

The key contribution of SWIN transformer is the shifted window mechanism. The solution is two pronged as it solves both the challenges, it helps the model to extract features at variable scales and also restricts the computational complexity with respect to image size to linear. The shifted windows mechanism divides the image into windows, each window is further divided into patches.

Each window block is divided into these patches and fed to model in same way the vision transformer processes the entire input image. The self attention block of the transformer computes the key-query weight for these patches within these windows.

This helps the model emphasize on smaller scale features, however this raises another challenge, since the relationship between the patches are computed within the windows self attention mechanism is unable to capture the global context which is a key feature in transformers. The problem of linear computational complexity also remains unaddressed.

In first layer, the image is divided into windows denoted in the figure by red boxes. Each window is further divided into patches denoted by gray boxes.

In second layer, this window is shifted as depicted and these windows are overlapping with the windows divided in previous layer. As it can be noted, the number of patches remain fixed, which ensures that the computational complexity remains linear with image size.

Moreover the overlapping nature of the windows ensures that the patches which were not paid attention to in previous layer due to being in different window, can be emphasized on in current layer. This ensures that global context is captured through these connections bridged from the previous layer.

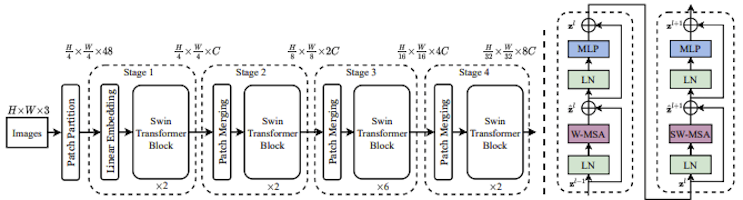

First the input RGB image is split into non-overlapping windows, each patch is treated as a token and converted to raw pixel values as its feature set. The patch size is 4×4, hence the feature set dimensions become 4x4x3=48. A linear embedding layer converts the patch into embedding token of dimension C. These tokens are fed through several transformer blocks, these blocks maintain the number of patches to be H/4 x W/4. This set of transformer blocks is called stage 1.

Shifted Window Multi-Head Self Attention

It can be clearly seen that the multi-head self attention MSA varies quadratically with the image dimension hxw. Whereas, shifted window multi-head self attention W-MSA varies linearly with the image dimensions hxw if the variable M is constant. This makes SWIN transformer more suitable for training on images with higher resolutions.

The downside to window-based self attention is the lack of self-attention connections outside the local window, which limits the model’s capability to capture the global contexts. The SWIN tackles this problem by shifting the windows with respect to each other in successive SWIN blocks. There are two window partitioning configuration which are alternated between two blocks of a particular stage.

The first block partitions the image regularly starting from the leftmost pixel, and dividing the 8×8 feature map into a 2×2 window grid where each window is 4×4 dimension (M=4). For the next block this window is shifted by M/2, M/2 pixels with respect to windows in previous block. This shifting now allows cross window connections which helps in gaining a global context for the relationships between the patches across the windows. To illustrate the processing of the image input sequence by the two blocks following equations come in handy.

The zl’ and zl are feature map outputs by the MSA block and the MLP block respectively, zl and zl+1 are the outputs from two consecutive layers of SWIN transformer blocks. One issue with shifted window partitioning is visible in the figure 4, where the second configuration has more windows as compared to the first configuration, it can also be seen that the some windows are smaller in second configuration.

SWIN transformer architecture solves this problem using cyclic shifting windows, where the windows on the fringes are padded with each other. This is called the cyclic shift padding depicted in figure ___

Results and Experiments

The performance of the SWIN transformer model has been analyzed and compared against state of the art CNNs and Vision Transformers for all mainstream computer vision tasks like image classification, object detection and image segmentation.

There are 4 variants of the SWIN transformer architecture, which vary in number of layers and the input dimension C of the input token sequence after linear projection. The different variants can be categorized as below

For image classification, the SWIN transformer has been evaluated against the state of the art CNNs like RegNet and EfficientNet and also against transformers like ViT and DeIT. The benchmark dataset utilized for this comparison is the widely accepted ImageNet 1k, which has 1.28million training images along with 50k evaluation images for about 1000 classes. SWIN-T outperforms DeIT-S architecture by 1.53% for 224×224 input for the top-1 accuracy metric

For object detection, COCO 2017 dataset benchmark is used to judge the performance of SWIN and compared against other backbones. For object detection, only the state of the art model backbones like ConvNeXt and DeIT are compared with SWIN.

The framework used to predict bounding boxes with these backbones is the cascade mask-RCNN. SWIN-B achieves a high box detection average precision of 51.9AP, which is a 3.6 point gain over what ResNeXt101-64x4d exhibits. On the other hand SWIN-T outperforms the DeIT-S model of similar size by 2.5 box AP points.

For Image segmentation, the benchmark dataset ADE 20k is used which consists of about 150 classes distributed across 20k training images, 2k validation images and 3k testing images. SWIN is compared to DeIT and ResNet 101 models. SWIN-S exhibits 5.3mIoU points higher the DeIT-S model of same computation cost. It also outperforms the ResNet-101 by 4.4 mIoU points.

Conclusion: Vision Transformers for Cyber Defense

Vision Transformers are an emerging method in solving computer vision tasks due to the immense success of transformers in the NLP domain. However, vision transformers are impeded by some fundamental issues arising out of differences between text data and image data.

The variation of scale between various visual entities is higher in the image data. Vision Transformers also use the same fixed scale token strategy used by transformers in NLP domain, unsuitable for extracting feature patterns for the small scale visual entities. Vision transformer have quadratic computational complexity against image size, making it unsuitable for processing high resolution image data.

SWIN transformers solve this problem by proposing images to be broken in windows, these windows are further broken down into smaller patches, these patches are fed as an input sequence and attention is calculated only by creating self attention connection locally. The self-attention connection with patches in other windows is achieved by shifting the windows in next block and allow the flow of connection from previous block.

Between the stages, the window size is decreased to allow creation of hierarchical maps. Since the patches are fixed in number, the computational complexity against the image dimensions is restricted to linear, making SWIN more suitable than traditional transformer.

To learn more about Bolster’s Research Team, and to see how generative AI can help protect your business from cyber attacks, speak with the Bolster team today!

References