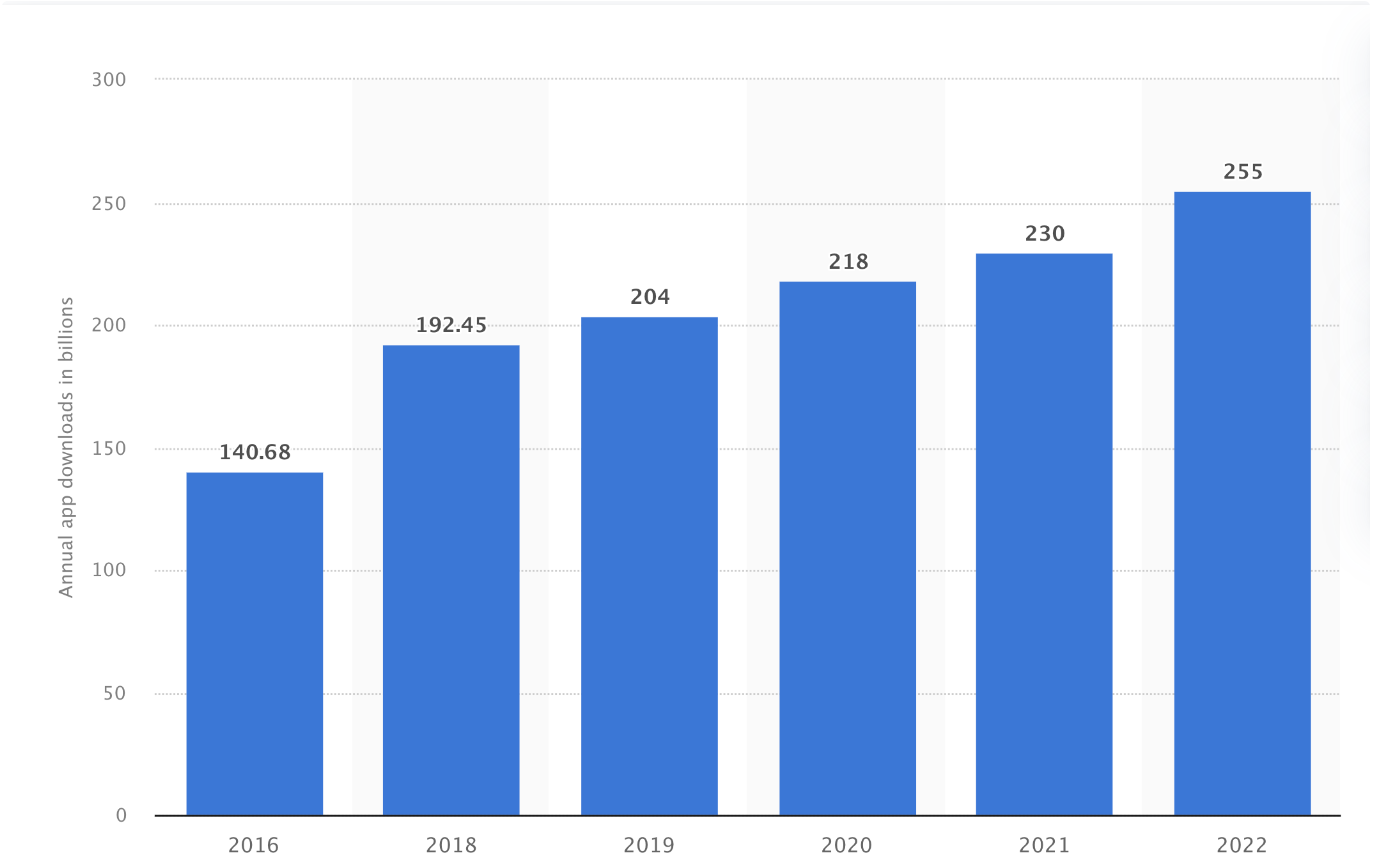

As part of our Large Language Models at Work blog series, we now delve into how we integrated the generative AI capabilities of LLMs to automate our critical takedown processes. As with other technological advances, the growing popularity of apps has brought a matching rise in related cybersecurity threats, and with it the need for fake app takedown procedures.

Fake App Takedown Procedures

Consider this scenario. It’s 2004, and Joe has an important meeting. On the streets of Manhattan, he is trying to convince a taxi driver to take him to his office. Before the meeting, he will pick up the coffee he ordered over the phone and fire up his laptop to check the latest stock market trends.

Fast forward to 2023, and Joe can use an app on his smartphone for each of these tasks. According to statistics published by Statista, 218 billion app downloads were recorded in 2023, a roughly 7% increase over 2022.

Businesses, especially B2C vendors, increasingly rely on mobile apps to drive engagement and reach their key demographics.

With this reliance on app technology also comes the threat of phishing attacks targeting the brands that offer the convenience of apps.

Most large-scale brands face an overwhelming phishing onslaught from malicious actors who modify and crack the original apps and host them on independent app stores. These cracked apps can steal sensitive information from the customers who download them and can spread malware and viruses. For businesses, these attacks not only siphon away potential customers but also often break the paywalls protecting premium content.

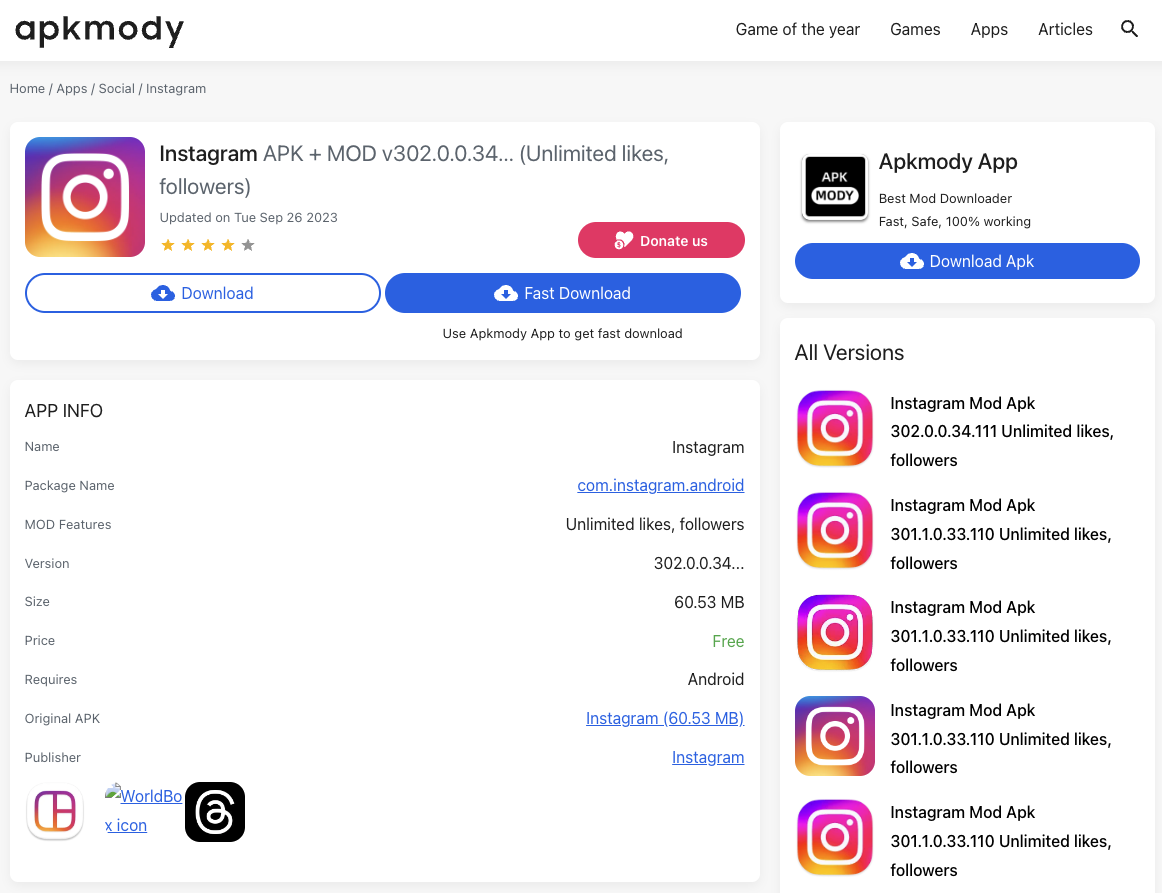

One example of such a phishing attack that we found is on the app hosting platform “apkmody”. The platform hosts a modified, or cracked, version of Instagram and misleadingly promises free unlimited likes. We assess the damage caused by such apps and classify it into two categories:

- Direct damage: These include explicit account takeovers (ATO) after a user signs up through the malicious platform, and theft of users' sensitive financial information such as credit card and bank account details.

- Indirect damage: These attacks compromise user devices and enlist them in botnets that serve as mules for DDoS attacks and VPN traffic.

These malicious apps target Instagram to steal its customer base as well as crucial user information.

Using Technology for Fake Mobile App Takedown

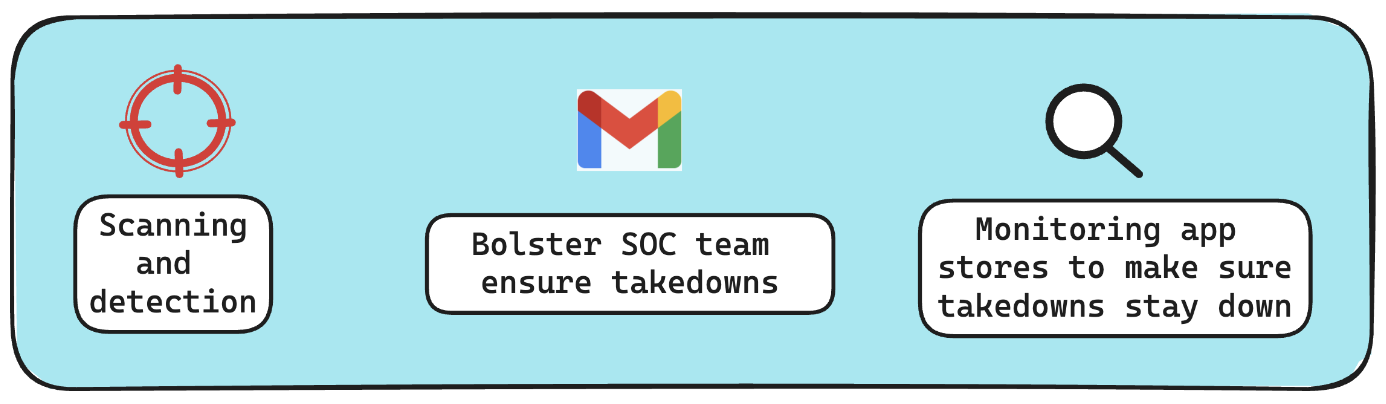

At Bolster, we relentlessly protect some of the biggest brand names from these malicious attacks through our App Store Monitoring and Fake App Takedown module. We hunt down scam apps and work with app store hosting and domain providers to take them down.

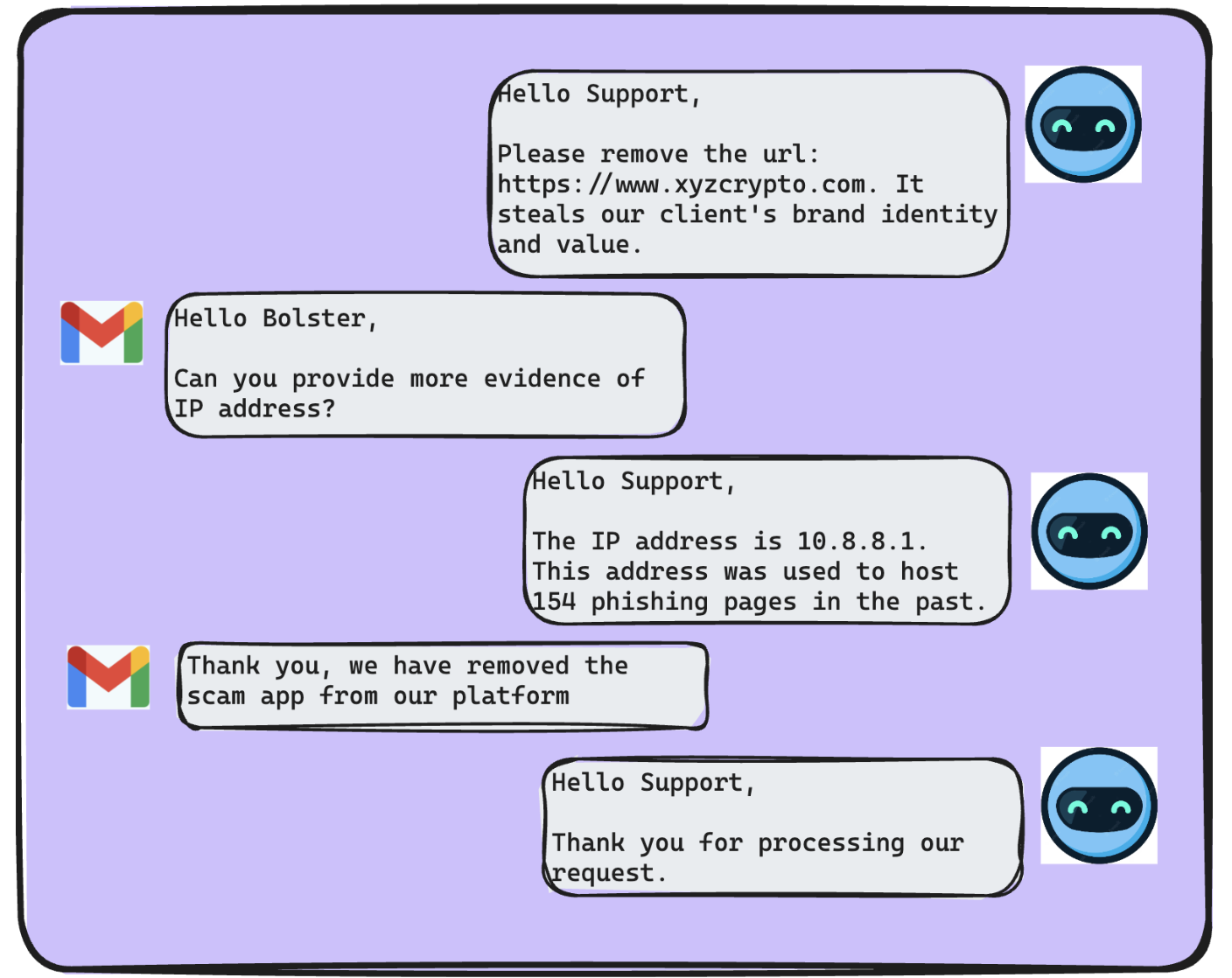

Once the Bolster engine sources malicious app URLs through scanning and detection, our team of analysts reaches out to the hosting or domain providers of the app stores to request a takedown. These providers require evidence, such as screenshots and URLs, to conduct an internal investigation. Once they confirm the claim is valid, they remove the apps from their platform, protecting our client.
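A takedown request of this kind can be sketched with Python's standard `email` library. This is a minimal sketch, not Bolster's implementation: the function name `build_takedown_request`, the `[TKT-1042]`-style subject tag, the body wording, and any addresses you pass in are hypothetical. It simply packages the evidence the text mentions: the app URL in the body and a screenshot as an attachment.

```python
from email.message import EmailMessage


def build_takedown_request(
    provider_email: str,
    sender: str,
    app_url: str,
    brand: str,
    screenshot_png: bytes,
    ticket_id: str,
) -> EmailMessage:
    """Compose a takedown request carrying the evidence providers typically ask for.

    Hypothetical helper for illustration; names and wording are assumptions.
    """
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = provider_email
    # Hypothetical convention: tag the subject with a ticket ID so replies can be matched later.
    msg["Subject"] = f"[{ticket_id}] Takedown request: unauthorized {brand} app"
    msg.set_content(
        "Hello,\n\n"
        f"We act on behalf of {brand}. The following app is an unauthorized, modified copy of the official app:\n\n"
        f"  {app_url}\n\n"
        "A screenshot of the listing is attached as evidence. "
        "Please review and remove the listing.\n"
    )
    # Attach the screenshot evidence; this turns the message into multipart/mixed.
    msg.add_attachment(
        screenshot_png, maintype="image", subtype="png", filename="evidence.png"
    )
    return msg
```

Sending is left out here; the standard library's `smtplib.SMTP.send_message` accepts an `EmailMessage` like this one.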

Natural Language as a Stakeholder in Fake Mobile App Takedowns

The key to swift takedowns is decisive and comprehensive outreach from analysts to the app store providers, following a workflow like the one the Bolster team uses. Email correspondence plays a large role in converting these requests into actual takedowns.

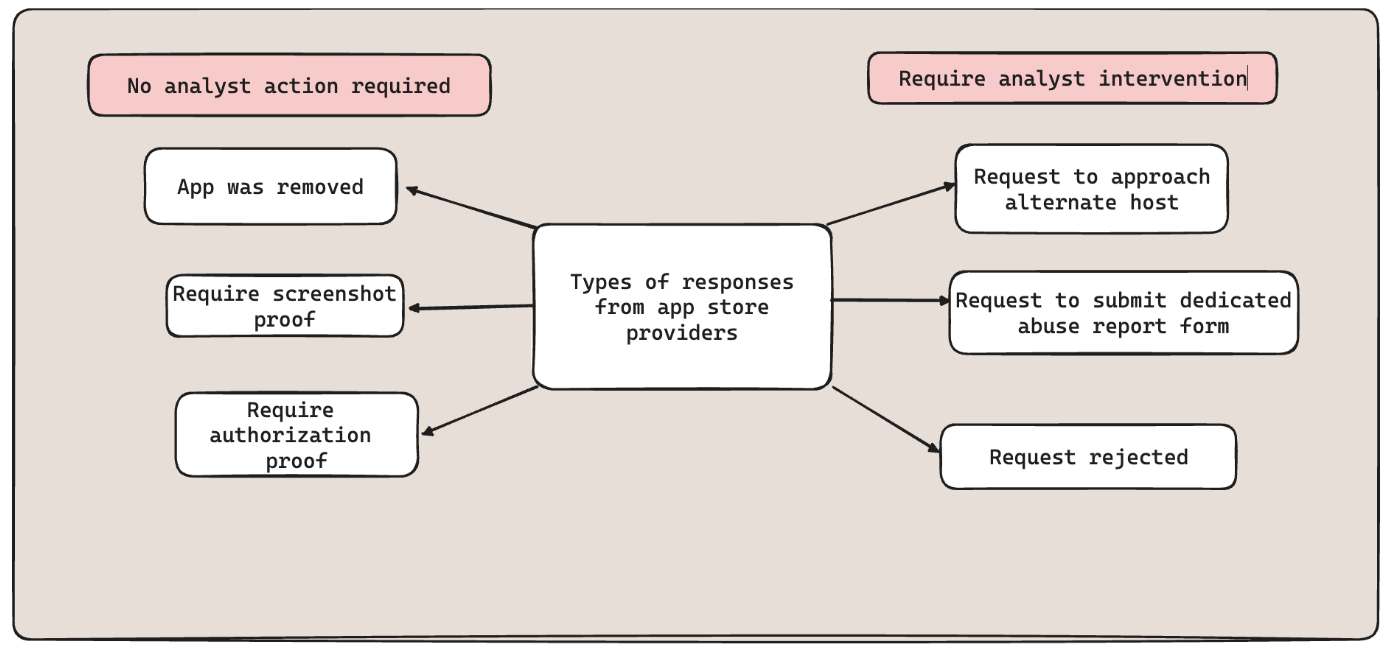

To initiate a takedown, we reach out to app store providers with evidence and proof of the client's authorization for us to act on their behalf. This kicks off a conversation between the SOC team and the app store provider's team. The provider's replies can confirm a straightforward takedown, ask for further evidence, request that we contact an alternative hosting provider, or, in some cases, reject the takedown request.

At Bolster, our SOC analysts have countermeasures for all of these cases and respond to the providers accordingly. This correspondence is often manual on both ends and consumes significant time and effort. However, the majority of countermeasures don’t require analyst intervention and can be executed by a software pipeline.

Large Language Models and Generative AI to the Rescue

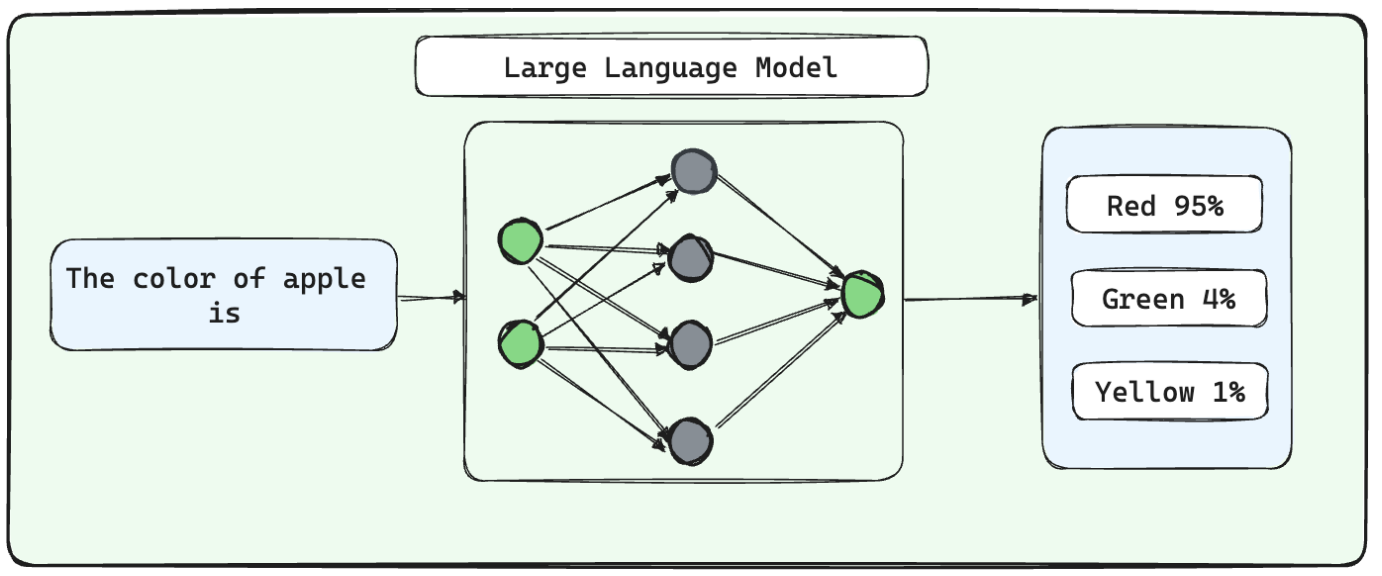

Ever since ChatGPT launched to the masses, the AI landscape has changed. Conversational and generative AI tools have spread widely, and companies are actively exploring ways to integrate them into their processes. ChatGPT uses the powerful GPT-3.5 and GPT-4 models, which belong to the family of Large Language Models (LLMs). These models are trained on a gigantic corpus of publicly available natural language data, including Wikipedia articles, Reddit threads, books, and other articles. They are probabilistic in nature and are built to understand the rich nuances of human language, draw connections between words, and interpret context. The basic principle behind training these networks is to teach them to predict the next word in a sentence.
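The next-word objective can be illustrated with a toy bigram model that simply counts which word follows which. This is a deliberately simplified stand-in: GPT models learn a next-token distribution with large transformer networks over subword tokens, not with word counts. The three-sentence corpus below is invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability distribution over the next word."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Invented toy corpus, for illustration only.
corpus = [
    "please remove the app",
    "please remove the listing",
    "please review the app",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # app ≈ 0.67, listing ≈ 0.33
```

An LLM does the same thing in spirit, assigning probabilities to possible continuations, but conditions on the whole preceding context rather than on a single previous word.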

Our App Store Monitoring and Fake App Takedown module tackles this issue by deploying LLMs directly into production through an end-to-end automated software pipeline that conducts takedowns in bulk. The pipeline can send automated takedown request emails at scale and also monitors the email inbox for responses from the hosting providers of these app stores.
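Monitoring the inbox also means tying each incoming reply back to the takedown request it answers. Below is a minimal sketch using the standard `email` package; fetching the messages themselves (for example over IMAP with `imaplib`) is omitted. The `[TKT-1042]`-style subject tag and the `sent_message_ids` lookup table are assumptions for illustration, not details of Bolster's pipeline.

```python
import re
from email import message_from_string, policy
from typing import Optional

# Hypothetical convention: each outgoing request carries a tag like "[TKT-1042]" in its subject.
TICKET_TAG = re.compile(r"\[(TKT-\d+)\]")


def match_reply_to_ticket(raw_email: str, sent_message_ids: dict) -> Optional[str]:
    """Return the ticket ID a provider's reply belongs to, or None if it can't be matched.

    sent_message_ids (hypothetical) maps the Message-ID of each request we sent to its ticket ID.
    """
    msg = message_from_string(raw_email, policy=policy.default)
    # 1) The In-Reply-To header is the most direct link back to our original request.
    in_reply_to = str(msg.get("In-Reply-To", "")).strip()
    if in_reply_to in sent_message_ids:
        return sent_message_ids[in_reply_to]
    # 2) Fall back to the ticket tag, which usually survives in "Re: ..." subjects.
    match = TICKET_TAG.search(str(msg.get("Subject", "")))
    return match.group(1) if match else None
```

Checking threading headers first and the subject tag second is a judgment call: headers are precise when present, while the tag still works when a provider replies from a fresh thread but keeps the subject.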

Once a response is received, our pipeline extracts the text body of the email and passes it to the deployed LLM. Our LLM module analyzes the text and decides on an appropriate response. If the response action does not require an analyst's involvement, such as closing the ticket or providing more evidence, the LLM takes the action on its own, then prepares and sends the response.
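Extracting the text body before it reaches the model might look like the sketch below. It is an illustrative assumption about how such a step could work, not Bolster's code: it prefers the `text/plain` part, falls back to crude tag-stripping of an HTML part, and drops `>`-quoted lines so the model sees only the provider's new reply.

```python
import re
from email import message_from_bytes, policy
from html import unescape


def extract_text_body(raw_email: bytes) -> str:
    """Return the human-readable body of an email, preferring text/plain over HTML."""
    msg = message_from_bytes(raw_email, policy=policy.default)
    body_part = msg.get_body(preferencelist=("plain", "html"))
    if body_part is None:
        return ""  # e.g. a message consisting only of attachments
    text = body_part.get_content()
    if body_part.get_content_subtype() == "html":
        # Crude HTML-to-text fallback; a production pipeline would use a real HTML parser.
        text = unescape(re.sub(r"<[^>]+>", " ", text))
    # Drop quoted lines from earlier messages so only the provider's new reply remains.
    lines = [ln for ln in text.splitlines() if not ln.lstrip().startswith(">")]
    return "\n".join(lines).strip()
```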

In special cases where a response action requires expert involvement to resolve the case, the LLM raises an alert for the SOC analyst team to take control of the situation.
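The split between automated actions and analyst escalation can be expressed as a small routing function. In this hypothetical sketch, the LLM is assumed to return an intent label plus a confidence score. The intent names mirror the kinds of provider replies listed earlier, while the action names, the choice to escalate rejections, and the 0.8 threshold are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class ProviderIntent(Enum):
    """Reply types a hosting provider may send (hypothetical label set)."""
    TAKEDOWN_DONE = "takedown_done"
    NEEDS_MORE_EVIDENCE = "needs_more_evidence"
    CONTACT_OTHER_PROVIDER = "contact_other_provider"
    REJECTED = "rejected"
    UNCLEAR = "unclear"


# Assumption for illustration: intents the pipeline may act on without an analyst.
AUTOMATED_ACTIONS = {
    ProviderIntent.TAKEDOWN_DONE: "close_ticket",
    ProviderIntent.NEEDS_MORE_EVIDENCE: "send_additional_evidence",
    ProviderIntent.CONTACT_OTHER_PROVIDER: "forward_request_to_named_provider",
}


@dataclass
class Decision:
    action: str
    escalate: bool


def route(intent: ProviderIntent, confidence: float, threshold: float = 0.8) -> Decision:
    """Act automatically only on known, confidently classified intents; otherwise alert the SOC."""
    if intent in AUTOMATED_ACTIONS and confidence >= threshold:
        return Decision(action=AUTOMATED_ACTIONS[intent], escalate=False)
    return Decision(action="alert_soc_analyst", escalate=True)
```

Defaulting to escalation means any label the pipeline does not explicitly trust, or any low-confidence classification, lands with an analyst rather than triggering an automatic reply.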

Strategies For Integrating LLMs into Your Fake Mobile App Takedown Workflow

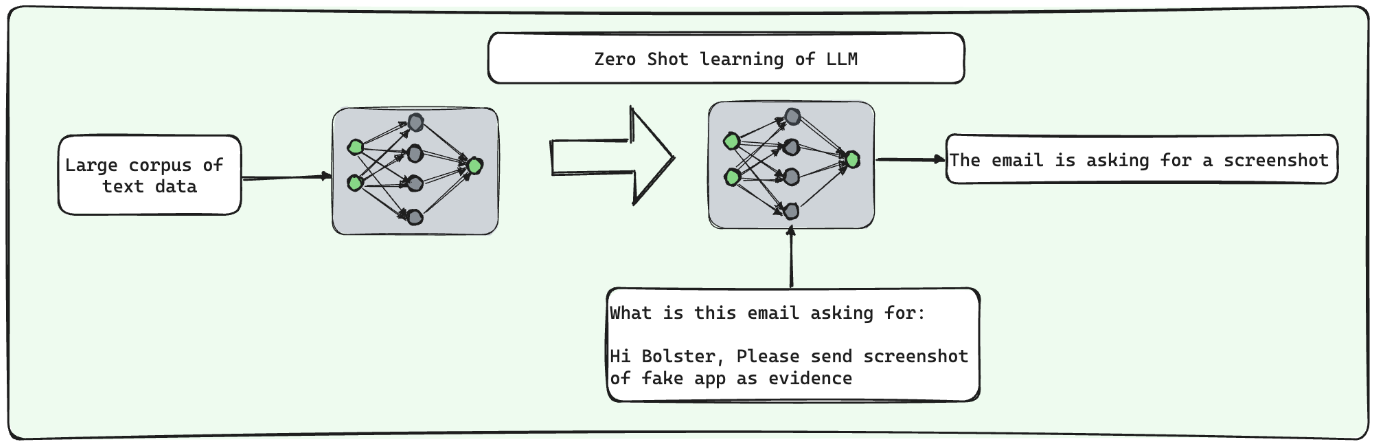

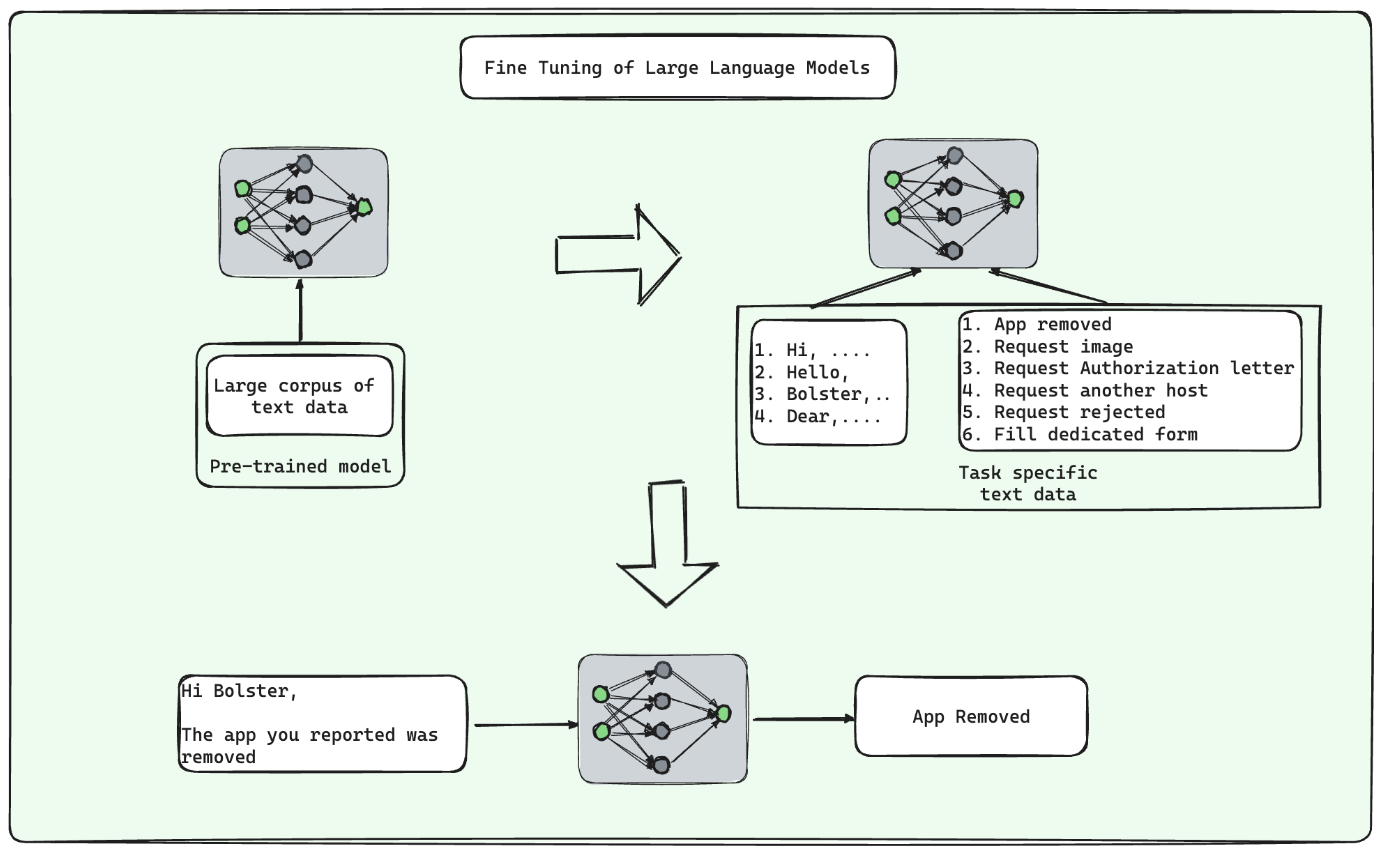

Zero-shot inference and fine-tuned inference are two distinct methods for leveraging Large Language Models (LLMs) like GPT-3 or its successors to perform specific natural language understanding tasks.

Zero-shot inference involves using a pre-trained LLM without any task-specific fine-tuning. In this approach, you present a prompt or input to the model, and it attempts to generate a relevant response based on its general knowledge and the patterns it has learned during its initial training.
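For the takedown use case, zero-shot inference amounts to describing the task in the prompt, with no worked examples, and parsing whatever the model returns. The prompt wording and label set below are hypothetical, and the call to an actual model API is deliberately left out.

```python
# Hypothetical label set for classifying provider replies.
LABELS = ["takedown_done", "needs_more_evidence", "contact_other_provider", "rejected"]


def build_zero_shot_prompt(email_body: str) -> str:
    """Zero-shot: the task is described in plain language, with no worked examples."""
    label_list = ", ".join(LABELS)
    return (
        "You are assisting a security team that files takedown requests for fake apps.\n"
        f"Classify the hosting provider's reply below into exactly one of these labels: {label_list}.\n"
        "Answer with the label only.\n\n"
        f"Reply:\n---\n{email_body.strip()}\n---\nLabel:"
    )


def parse_label(model_output: str) -> str:
    """Map the model's free-text answer onto a known label, or 'unclear' if it strays."""
    answer = model_output.strip().strip(".").lower()
    return answer if answer in LABELS else "unclear"
```

The `parse_label` guard matters because a zero-shot model has never seen the exact output format during training and may answer in prose instead of a bare label.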

Zero-shot inference is versatile and can be used for a wide range of tasks without the need for task-specific training data. However, its performance can be limited when tackling complex or domain-specific tasks, as the model’s knowledge may be too general to provide accurate or contextually appropriate responses.
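Fine-tuned inference, by contrast, continues training a pre-trained model on labeled, task-specific examples so that it picks up the domain's vocabulary and the expected output format. A minimal sketch of preparing such examples follows. It assumes a chat-style JSON Lines layout (one object per line with a `messages` list of `system`/`user`/`assistant` turns), which is the format OpenAI's fine-tuning API accepts for chat models. The post does not say which model or data format Bolster used, so the schema, labels, and texts here are illustrative.

```python
import json


def to_finetune_record(email_body: str, label: str, reply: str) -> dict:
    """One training example: a provider email in, the desired label and reply out.

    The chat-style schema and the JSON-encoded assistant answer are assumptions.
    """
    return {
        "messages": [
            {"role": "system", "content": "Classify the provider reply and draft our response."},
            {"role": "user", "content": email_body},
            {"role": "assistant", "content": json.dumps({"label": label, "reply": reply})},
        ]
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(rec, ensure_ascii=False) + "\n" for rec in records)
```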

Conclusion

For the end-to-end fake app takedown pipeline, we employed the fine-tuning strategy to train and deploy our LLM to handle the email correspondence.

Fine-tuning lets us take a pre-trained LLM's generalization capabilities and customize them for our specific inference problem: handling email-based conversations in the fake app takedown context. The LLM module helps our fake app takedown process achieve full automation, from initiation to response handling.

This optimized workflow initiates takedowns, receives and analyzes responses using our custom fine-tuned Large Language Model, and produces natural-language emails while taking the required action. The workflow can also alert the SOC team to take control if and when needed.

To learn more about how Bolster’s automated machine-learning technology can help protect against fraudulent apps, request a demo with our team today.